A little bit on the projects I've completed

Case Studies

Table of Contents

- 2020: Design Thinking at CSL Behring

- 2018: Self-Service Analytics for FinTech

- 2018: Why we do Discovery Engagements

- 2018: Enabling Data Analytics in FinServ

- 2018: How I Manage Blockchain Projects

- 2018: How to Manage a Successful Customer 360 Project

- 2018: Avoiding EDW Project Failure

- 2017: Scale Your Data Science Team

- 2017: CPG company wants to integrate SAP HANA using Power BI

- 2017: A FinServ Wants to Create a Customer360 Solution

- 2017: A FinTech Wants to Offload Hadoop Processes to Azure

- 2017: An IoT Company Wants a Hybrid Cloud Cloudera Solution

- 2017: Hortonworks DR Solution Becomes an HDInsight Migration

- 2016: A CPG Company Uses IoT and Machine Learning to Solve a Manufacturing Problem

- 2015: I Build a Successful Hadoop Managed Service Offering at a Managed Hosting Provider

- 2015: Using Data Science and Hive to create a just-in-time analytics solution

- 2014: True Digital Transformation with an Innovative Data Lake

- 2007: My First Mobile Development Project

This page is devoted to summaries of my most interesting engagements. It’s not an exhaustive list. Writing a retrospective helps me understand what went right, what went wrong, and what I could’ve done better. I find Case Studies to be far more interesting of a job candidates true abilities than an exhaustive skills listing on most resumes I see.

Engage with me for your next assignment ›

2020: Design Thinking at CSL Behring

CSL Behring won a Forrester Award for their innovations that came as a direct result of Design Thinking sessions I hosted at the MTC. Read more. Design Thinking sessions are a great way for IT and lines-of-business to understand the Art of the Possible and design prototypes quickly and collaboratively.

2018: Self-Service Analytics for FinTech

Our FinTech client wanted to provide true self-service analytics to their customers. This is a huge application for a big FinTech with a lot of customers. We needed to start small and show value quickly to win the long-term engagement. Click here to learn about the busines problem and how I decided what to focus our efforts on to show value quickly.

2018: Why we do Discovery Engagements

Before embarking on a long-term engagement with a new client I insist on doing a short-term Discovery Engagement. You might’ve heard about these or experienced a typical version on your last project. I assure you, my Discovery Engagements are not typical. Let me show you why that is. Some clients balk at doing these engagements, stating things like, “You already bid on the RFC, just go do the work” and “I don’t have time to have my staff tied up in meetings for a week”. If that is your experience with Discovery Engagements, I’m sorry. These engagements are in your best interest as my client. In this case study I’ll show you how GOOD Discovery Engagements should work. You can even use my handouts and IP to run your next internal Project Kickoff in a more meaningful way.

2018: Enabling Data Analytics in FinServ

Financial services clients are my favorite clients. I love finance and investing and this industry is constantly innovating. It’s choke full of Digital Disrupters. I just completed an engagement where the customer knew WHAT they wanted to do (read on) but stumbled a few times making the project a success. The customer needed to get better data into the hands of their data scientists faster. Read about what I did to help them move quickly and iterate on their idea.

2018: How I Manage Blockchain Projects

I’ve run a few small blockchain experiments for clients. Read about what I’ve learned that may help you avoid some blockchain project pitfalls. These are not traditional development projects. In these case studies I’ll show you what I learned.

2018: How to Manage a Successful Customer 360 Project

I’ve managed a few Customer 360 engagements for clients. Read about these engagements and how to increase your odds of success. Not sure where to get started? I’ll show you a few use cases that are invaluable and actually can be implemented in about a month.

2018: Avoiding EDW Project Failure

Any data project, but especially enterprise data warehouse projects, are prone to failure. There are two main reasons:

- Data modeling: determining the right format to persist your data, before you actually report off of that data, generally takes weeks. And you’ll find the models always need to be reworked.

- Data movement: Any time you need to move data you need to write expensive ETL code. And that ETL code needs to be adjusted when the models are adjusted.

I avoid both of those problems by deferring them until late in the project. It’s much easier to do data sandboxing and discovery and then generate the reports, dashboards, and ML algorithms first, then create the models and ETL to support them. When clients run data projects this way they have a much higher likelihood of success.

I’ve written a few articles and whitepapers on how I handle this projects. You can read about them here. You can even view the webinar where I demonstrate the process I follow.

2017: Scale Your Data Science Team

I wrote an entire blog post on just data science case studies.

2017: CPG company wants to integrate SAP HANA using Power BI

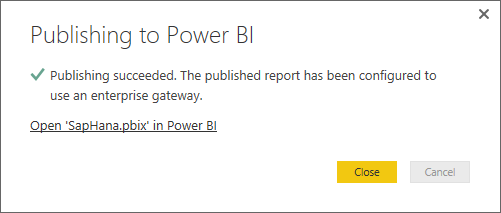

PBI Online provides several out-of-the-box analytics tooling that aren’t available in on-prem solutions. Our customer wanted to utilize the Natural Language Processing abilities of PBI Online to spot marketplace trends for better sell-through, all without coding NLP themselves. We started with a simple solution; install a PBI Gateway and wire-up PBI Online to the HANA data which resides in their local data center. This worked well, for a little while.

The problem became that the HANA data is updated infrequently, only a few days per quarter. But we quickly learned that on those days the data could be updated many times PER HOUR as regression models and What If analysis changed. HANA was responsible for all data updates and PBI Online would “see” those data changes in DirectQuery mode, but unfortunately benchmarking showed that could be delayed by up to 15 minutes. This latency was unacceptable to the customer.

Updating data was handled by HANA, the data simply needed to be replicated into Azure where PBI Online could access the data more quickly without the latency. The data was only refreshed for a few days every “season” of the year, but during those days the HANA data could be reprocessed many times, generating new dimensions and measures. PBI Online could not poll for new data from HANA quickly enough to make the user experience acceptable. My company has standard real-time data movement technologies that we implemented to make the user experience better by providing more real-time information. The result of this effort allowed HANA to remain the system of record for this data while minimizing latency with the Power BI-based reports.

Updating data was handled by HANA, the data simply needed to be replicated into Azure where PBI Online could access the data more quickly without the latency. The data was only refreshed for a few days every “season” of the year, but during those days the HANA data could be reprocessed many times, generating new dimensions and measures. PBI Online could not poll for new data from HANA quickly enough to make the user experience acceptable. My company has standard real-time data movement technologies that we implemented to make the user experience better by providing more real-time information. The result of this effort allowed HANA to remain the system of record for this data while minimizing latency with the Power BI-based reports.

2017: A FinServ Wants to Create a Customer360 Solution

I was engaged by a FinServ that provides receivables funding to small businesses. This is akin to “payday loans”…the industry has a negative connotation but receivables funding is a valued service for seasonal businesses that rely on short-term loans to make payroll. These companies don’t mind paying usurious rates for short time periods. The problem is determining which companies are good credit risks and which are not.

The obvious answer is “we need a heavy dose of Machine Learning”, but this company already did all of that.

What they were really interested in doing is enabling their call center reps to understand the customer faster, without having to query 3-5 systems and applications to get the 360 degree view of the customer.

Like most companies they started by trying to use Salesforce as the data integration tool. It’s very expensive to ETL data into Salesforce and then have developers write the necessary code to integrate all of that information in the very constraining Salesforce UI. After 6 months the company abandoned the project seeing that it would be years until the solution was delivered.

My Solution

The company engaged with me based on webinars I have given on Fast Customer360 solutions. They wanted to do a 3 month PoC to see if my process would work for them.

My solution is to build a “System 1 Dashboard” of all relevant customer data at the time the customer calls the call center. All without ETL’ing any data. When I do this I start by creating an API for the dashboard running in The Cloud. That API link is embedded into the Salesforce UI. When a rep clicks the link the dashboard is launched with the relevant customer KPIs. This eliminates all of the coding effort needed in Salesforce.

My solution is to build a “System 1 Dashboard” of all relevant customer data at the time the customer calls the call center. All without ETL’ing any data. When I do this I start by creating an API for the dashboard running in The Cloud. That API link is embedded into the Salesforce UI. When a rep clicks the link the dashboard is launched with the relevant customer KPIs. This eliminates all of the coding effort needed in Salesforce.

Next we need to integrate source system data. The company had 5 major systems they wanted to integrate to get a near perfect Customer 360 solution. Each of those systems were third-party off-prem (cloud) systems with well-defined APIs providing up-to-the-minute data. At this point MY API called the 3rd party APIs and provided some bs4 and react code to format the data nicely.

We could now add the code necessary to calculate the most important KPIs and device on-the-fly scripts for the reps to use during the interactions.

We could now add the code necessary to calculate the most important KPIs and device on-the-fly scripts for the reps to use during the interactions.

This was Phase 1 of the project and it was completed in MVP form in 3 months with 5 dev resources. Phase 2 took another 3 months and involved landing the external data in a data lake using Kappa Architecture. We could then build the “predictive” analytics of a really great Customer 360 solution.

In summary, the customer was thrilled to be able to deliver quick wins with small bets. Many times during the 6 months we changed focused when we realized there were more valuable use cases that should be prioritized. This is the true benefit of the cloud.

Contact me for more information ›

2017: A FinTech Wants to Offload Hadoop Processes to Azure

My company was engaged by a FinTech with a small Cloudera on-prem environment. The client was quickly outgrowing their 12-node cluster and were looking at alternatives to adding existing nodes in their co-lo environment.

“We want to get out of the data center business”

–Almost every customer we engage with lately

We started the engagement by working with the customer to determine what workloads the cluster was currently handling.

- Ingest (via Kafka) and land into an HDFS-based data lake data to support “Customer360” analytics.

- EDW-like queries and analytics workloads using Kudu and Impala.

Like with most on-prem Hadoop installations, storage was growing out-of-control but compute resources were barely used. The typical HDFS Replication Factor meant that for every new GB of data ingested the client needed to allocate 3GB of HDFS DAS storage.

The Solution

The solution was a hybrid cloud Cloudera installation on Azure using ADLS. Most data was “cold” and did not require HDFS or Kudu. But it needed to be maintained. That data was pushed to ADLS and a  small CDH cluster in Azure could read that data when required. Implementation time was less than 3 weeks with only a small effort to refactor Impala code against ADLS.

small CDH cluster in Azure could read that data when required. Implementation time was less than 3 weeks with only a small effort to refactor Impala code against ADLS.

While this ameliorated short term pain we began to realize that landing cloud-born System of Record data, such as Salesforce and credit reporting agency data, on-prem, was not ideal. There was no good reason to clog on-prem internet pipes with data that would ultimately be viewable on a WebApp hosted in Azure anyway. It was also unnecessary to run 6 nodes just to support Kafka. A slight refactoring of data ingestion code allowed the customer to use EventHubs instead of Kafka and eliminate on-prem kafka scale-out.

In summary, the customer was thrilled to be able to offload non-analytics traffic from their on-prem Cloudera investment. Our solution was low-risk and allowed them to seamlessly shift future workloads from on-prem to cloud, and back.

2017: An IoT Company Wants a Hybrid Cloud Cloudera Solution

We were engaged by a manufacturer of IoT inventory solutions to replicate their AWS Cloudera environment in Azure. A number of customers wanted to use this manufacturer’s IoT solutions but were uncomfortable having their biggest competitor being involved with their infrastructure. This manufacturer had no Azure presence or expertise and looked to us to build the entire Azure solution as quickly as possible.

It’s common for large retailers to demand that their data is NOT processed using AWS. Retailers don’t want their largest competitor being their infrastructure provider. This is a good thing. Putting all your eggs into one public cloud provider is not smart. Having a hybrid cloud strategy should be a MUST for any company with a public cloud presence.

The first step was to build the Azure footprint. We examined the customer’s CloudFormation scripts and replicated the setup in Azure using analog services. This was a basic lift-and-shift, until it came to standing up the Cloudera solution. To save costs the customer used S3 storage in lieu of HDFS. The simple solution would be to swap out S3 for WASB storage.

But instead, we took a different approach and leverage ADLS as the persistence tier. It’s slightly more work to wireup ADLS to Cloudera, and the customer didn’t see the benefit…at first. One of the key complaints customers had with the manufacturer’s solution was the Spotfire reports. They were not customizable and were not real-time. But, with the data now in ADLS, this opened an opportunity to build custom dashboards using U-SQL and Power BI.

The customer was impressed. By using ADLS/A the customer was able to offload processing power, enabling them to shrink the CDH footprint.

The Solution

Within 3 weeks we had the Azure solution online and processing data. We began building new PBI  dashboards soon after that. The customer is now truly hybrid cloud. What was supposed to be a solution for a handful of customers that did not want their data in AWS became an “Enterprise” offering worthy of a premium surcharge for customers that wanted U-SQL and Power BI reporting.

dashboards soon after that. The customer is now truly hybrid cloud. What was supposed to be a solution for a handful of customers that did not want their data in AWS became an “Enterprise” offering worthy of a premium surcharge for customers that wanted U-SQL and Power BI reporting.

We are currently evaluating Altus (“managed Cloudera”) in Azure to further contain costs and the operations burden of running Hadoop.

2017: Hortonworks DR Solution Becomes an HDInsight Migration

A CPG company had a growing on-prem Hortonworks HDP cluster and a requirement for HDFS Disaster Recovery. The customer had some departmental WebApps in Azure but realized the cloud was a perfect DR tool for their HDFS data. My company was engaged to assist in an automated HDFS DR solution. We have a number of OSS tools we use to do on-prem to cloud HDFS migrations that work equally well for DR.

We started with a small engagement to determine the size of data and use cases before recommending a solution. We soon discovered that the on-prem HDP solution was not optimally configured, was not maintained, and needed to be upgraded. The company did not have any in-houses Hadoop or Linux Admins. Cluster usage grew organically from business users over a 3 year period, but was not maintained by IT. Kafka was running on a single node and 2 of the zookeeper nodes had bad configurations. The loss of a single worker node would take the cluster down with guaranteed data loss.

The Solution

We stopped the bleeding and fixed the HDP cluster to avoid immediate catastrophe. We then used our tooling to replicate the “hot” data in near real-time to ADLS. ADLS has a much better TCO for largely cold data with simple query and ingestion use cases. We wired up ADLS both on-prem and using a small HDInsight cluster in Azure.

As with most of these projects we quickly found additional use cases for elastic Hadoop processing that the customer didn’t already realize. CPG companies are “seasonal” and their analytics needs spike for a few days during the next season’s planning, but then drop off dramatically. Elasticity is impossible to achieve using on-prem Hadoop solutions. We migrated the seasonal workloads to the HDI cluster and scaled that up for the few days the compute power was needed per cycle.

As with most of these projects we quickly found additional use cases for elastic Hadoop processing that the customer didn’t already realize. CPG companies are “seasonal” and their analytics needs spike for a few days during the next season’s planning, but then drop off dramatically. Elasticity is impossible to achieve using on-prem Hadoop solutions. We migrated the seasonal workloads to the HDI cluster and scaled that up for the few days the compute power was needed per cycle.

We are currently evaluating with the customer moving additional workloads to HDInsight. This customer is actively looking to get out of the data center business and does not want to hire Linux and Hadoop talent. Azure and HDInsight make a compelling offering.

2016: A CPG Company Uses IoT and Machine Learning to Solve a Manufacturing Problem

As a MSFT Solution Architect I had the pleasure of working with large companies solving their trickiest problems. Hershey makes Twizzlers licorice in Hershey, PA. The product is sold by weight, not by volume, but the packaging really only looks right if the volume in the package is “just right”. If the mix is a bit “too wet” then the product appears smaller in the packaging. If the mix is “too dry” not enough of the product makes it into the packaging. Hershey has a complicated manufacturing setup with telemetry sensors built-in. The goal is to be able to use the telemetry in a predictive manner to determine if the mix will be too wet or too dry and to adjust it accordingly.

The Solution: Fast time-to-market with Azure ML

AzureML is able to consume the telemetry data in real-time and send command-and-control back to the devices in some cases, or minimally to the assembly line staff to make adjustments to the line in real-time. This eliminates wastage since packages that don’t “look right” or don’t have the correct weight need to be discarded.

Here is a video of the solution (go to 0:40):

2015: I Build a Successful Hadoop Managed Service Offering at a Managed Hosting Provider

A huge online ad analytics provider engaged with a MHP (Managed Hosting Provider) to outsource their Hadoop administration. The only problem…the MHP had no experience doing this and their client was a very demanding customer. So they asked me to help them stabilize their client’s environment and then teach their people how to support the client long term.

Learning a customer’s Hadoop implementation and then documenting it for long term support is not a difficult process. Teaching Hadoop and general Linux administration to a MHP staff that really only knows Windows…that’s a bit more difficult.

Learning a customer’s Hadoop implementation and then documenting it for long term support is not a difficult process. Teaching Hadoop and general Linux administration to a MHP staff that really only knows Windows…that’s a bit more difficult.

But honestly, how difficult is Hadoop and Linux administration? If you can properly document the common issues and instruct the support folks to call you with any issues, and then give them rudimentary training on ssh, jumphosts, and bash’s history command…there isn’t much that can’t be done. The biggest problem is psychological…convincing a Windows administrator that bash is not impossible with a bit of training.

With a 4 hour training class I was able to teach the MHP support personnel enough information that they were closing 85% of tickets without escalation within a month. Those are unheard of metrics in this industry.

Taking Hadoop Managed Services to the next level

But the story gets better. The client was ecstatic. Uptime improved. Time-to-resolution was better than ever. The client gave the MHP responsibilities to manage their other Hadoop environments in Europe and Hong Kong. The MHP was not the hoster for those regional data centers, but we were so good at the MSP work that we were given access to those environments.

One day I was walking through the office and a Sales Guy called me in. He congratulated me and then asked me if we could provide similar Hadoop MSP services to two of his other hosting customers.

Absolutely!! I began working with his customers. Building an MSP practice is not difficult, regardless of the technology. I follow this process.

- shore up existing processes

- document the customer-specific implementation issues

- begin training the L1/L2 support to handle basic Linux/Hadoop issues

- ensure that L1/L2 is fully comfortable with escalation processes.

- Install a culture of continuous improvement in your staff. Don’t try to make the process perfect at first. Just make it workable. Always look for opportunities to make the process better.

I can train your existing staff to support your Hadoop installation

Many companies outsource their Hadoop administration. This really isn’t necessary if you have support staff already. Windows admins and DBAs can be taught Hadoop administration in short order.

We are living in a new “Public Cloud” era where you don’t just manage technology, rather, you provision services to solve business needs. Hadoop administration is seen as something mystical that requires Highly Paid Contractors. It’s not that difficult.

My advice is to take your existing support staff and look at ways to get them the skills they need to work in this new era. Most IT personnel are embracing this movement and want to learn new skills and broaden their horizons.

2015: Using Data Science and Hive to create a just-in-time analytics solution

My consulting firm was asked by a major drug manufacturer to determine how to boost sales of a drug before it came off patent. The pharma company engaged their internal data teams and various consultancies and each basically said something like:

- this will be a 9-12 month engagement

- needing a data warehouse/mart to capture prescription data

- and a dashboarding tool to report the answer

But the primary requirement was: Tell us why the drug is being prescribed but is not being filled by the specialty pharmacies. Of note: this is a VERY EXPENSIVE drug that CURES a debillitating disease. You may be thinking that insurance companies wouldn’t want to pay for an expensive drug like this. Not true. The disease is so expensive to treat for a lifetime that the insurers would rather just pay a high fee for a guaranteed cure.

Seeing that “time-to-market” requirement you can see why the business leaders weren’t happy with a 0-12 month engagement.

My Role

I was brought in because I have unorthodox approaches to data projects. I proposed building a data sandbox (a data lake, but much smaller since the data volumes are tiny) and have a data scientist from the manufacturer work with me to find the answers.

I preach that we should never spend massive amounts of time up-front doing modeling and ETL development if we are unsure we don’t know the answer we have been asked to find. It’s better to experiment and build data sandboxes before we invest in data modeling and ETL.

I told them I honestly had no idea how long this project would take or if it would even be successful. But I told them we could have weekly touchbase calls and we would discuss our progress and my client could scrap the project at any time.

My Solution

At the time the current project team was writing ETL specifications to give to the specialty pharmas. This was a lengthy data dictionary with a lot of contractual language.

I scrapped this immediately.

Instead, we got the basic legalese out of the way and told the specialty pharmas to give us their rawest, most dirtiest data they had. And give it to us as fast and often as they could. This caused a lot of agita. Our industry assumes there will always be a data dictionary with defined terms and field layouts in known data formats like csv.

Instead, we got the basic legalese out of the way and told the specialty pharmas to give us their rawest, most dirtiest data they had. And give it to us as fast and often as they could. This caused a lot of agita. Our industry assumes there will always be a data dictionary with defined terms and field layouts in known data formats like csv.

I don’t care about that.

Let’s face it. A specialty pharma likely doesn’t have a top notch ETL developer on staff. But they’ll likely have a DBA that can drop their database (minus the PII and HIPAA stuff) to csvs and send it to me. And I can massage that data faster than any ETL developer.

After the contractual stuff was out of the way it took about a month before we started getting the rawest, dirtiest data. Again, this isn’t a lot of data, so why bother spending ANY time loading it into a database? I can use Hive and Drill to query the data in the flat files. And that’s what we did. I sat with the data scientist and we iterated over the data we were given. We looked for trends. We spotted dirty data we had no hypothesis about. We went back to the specialty pharmas and asked them why we saw these things.

After 3 weeks we had a working hypothesis as to why the presciptions were written but the specialty pharmas weren’t filling them. We even had some novel approaches to incentivize them to fill them faster. We presented our findings to the project sponsors and they were ecstatic.

Here’s what’s really interesting…I told them I now needed some time to build a proper data model, proper dashboards and reports, etc. I told them I needed 3 months to load the data up into a SQL Server. But the customer didn’t need ANY of these things…they had their answer, the project was over.

So…I ended up losing a very lucrative (and fun) contract and a prestigious pharma manufacturer. But I had a happy customer that got the answer to their problem in about 2 months.

2014: True Digital Transformation with an Innovative Data Lake

I consulted for a set-top box data provider (if you have a cable box that shows channel guide data, you probably used this company’s data). For close to 40 years this company provided what’s on TV data in one form or another. This is a one way data business…the data goes out and the company never got any telemetry back regarding that data.

All of that changed with the advent of “smart TVs”. A Smart TV manufacturer approached my client to provide not just the data to the TV but to collect telemetry from the viewer. We were contracted to write a series of Web APIs to capture things like

- when was the TV turned off/on

- when was the volume changed

- when was the channel changed

All of this data could be correlated with what was being viewed. The manufacturer, and my client, realized they had a goldmine on their hands. So we were soon asked to provide reports and dashboards that could be sold to Madison Avenue.

The problem is…we created those artifacts and no one bought them.

My Idea

The implicit assumption is we knew what reports and data Madison Avenue really wanted. We didn’t. Instead, my idea was to create this nascent thing called a “data lake”. At the time data lakes were not ubiquitous and Hadoop was still  somewhat unknown. The simple solution was to land the data in real-time to the “landing” area of the data lake. Then nightly we could aggregsate the data to another area. We would document the entire data lake and provide a subscription service to marketers. We allowed customers to copy the data from the data lake if they liked, or we provided a nominal amount of storage space in HDFS for users to write their own Hive queries.

somewhat unknown. The simple solution was to land the data in real-time to the “landing” area of the data lake. Then nightly we could aggregsate the data to another area. We would document the entire data lake and provide a subscription service to marketers. We allowed customers to copy the data from the data lake if they liked, or we provided a nominal amount of storage space in HDFS for users to write their own Hive queries.

By doing that we now had telemetry into what customers were doing with the data lake data. We could further semantically enrich the data for them (for a fee) and we had insight into data we weren’t yet capturing but we knew would be invaluable.

The Digital Transformation

Digital Transformation is a buzzword that most people can’t actually describe.

I can.

The Digital Transformation is not just about making your business a digital business. It’s about generating revenue from data that you have but have never monetized. For me, companies can monetize their data and it’s often more profitable than their underlying Line of Business.

The data lake we built was soon generating more revenue for the Smart TV manufacturer than the actual sales of the hardware. That is the Digital Transformation.

But the story doesn’t end there. Since my client is a provider of “one-way set-top box schedule data” they now had a new offering for all of their other customers – the ability to quickly add telemetry capture and data lake analytics.

2007: My First Mobile Development Project

A Construction ISV Uses Mobile to Transform Its Industry

I was hired at a construction ISV during the Great Recession of 2017-2018. Moving into that industry when construction companies were filing for bankruptcy daily seems like a poor choice. But Maxwell Systems knew that the timing was right to make investments in the future. Since the mid-1970’s Maxwell offered the same accounting and estimating software packages rewritten every 10 years to “modernize the platform”. First mainframe, then DOS, then Win32. Maxwell wanted to truly revolutionize the industry with this offering. They brought me on board to add novel spins to software that really hasn’t seen any innovation in 30 years.

Accounting software has seen very little innovation in 30 years. Customers have no compelling reason to upgrade.

Finding the Problems and Innovation Opportunities

I started by visiting jobsites where our software was used. User pain was immediately evident. Site managers sat in trailers in the middle of nowhere dealing with on-site payroll issues and time tracking. This was 2008 before the ubiquity of wireless networks and 3G.

Jobsite time tracking is easy to solve with a smartphone that can snap a photo of a QR code. Site managers had iphones and I wrote a small application to read the QR codes, construct an XML file, and upload it to the database via USB cables. We were the first company to offer time tracking without expensive scanner hardware.

The Ipad Brings Even More Opportunities

During this time the first-gen iPad was introduced, only available on the AT&T network. At the time AT&T was concerned that this new computing device would swamp its data network so they put severe limitations on data transfers. Ipads were $900 and the market was not ready for these devices. Apple was very vigilant about iOS apps going through strict QA processes and it usually took 3 weeks to get a new version of the application into the AppStore. None of this deterred me.

I created a tablet version of our application with a UI driven entirely out of XML that was downloaded from our servers. This allowed me to move quickly. Our software retailed at $25,000 and even with improved time tracking the impetus for a customer to switch to our software was not compelling. We branded iPad covers with our logos and provided one iPad to any new customer. At the Las Vegas Builders Show in 2008 we sold more net new seats than ever before since every customer really wanted a new iPad (mostly, for personal use).

This was just the beginning. Soon our developers were focused entirely on the mobile platform. Customers loved our product.

Dave Wentzel CONTENT

case studies