Case Study: Data Analytics in FinServ

“We have some great ideas that will revolutionize our industry. Can you help us scale?”

This is a case study for a recent FinServ client that wanted to move quickly with their data product offering. This particular customer did all of the common things I see when embarking on a data project:

- take a few sprints to gather requirements

- take a few more sprints to look at the data that already exists in the ecosystem

- take a sprint to ingest new data sources to fill the gaps

- take a few more sprints to model the data

- take quite a few more sprints to ETL the data

Here are the problems with that approach:

- that looks to be about a year. Definitionally, that’s not agile

- the longer a project takes the more risk management has to occur

- that’s an expensive-looking capital project, and those tend to have high failure rates

- business priorities change

So, how do we move faster?

Step 1: Understand the WHATs

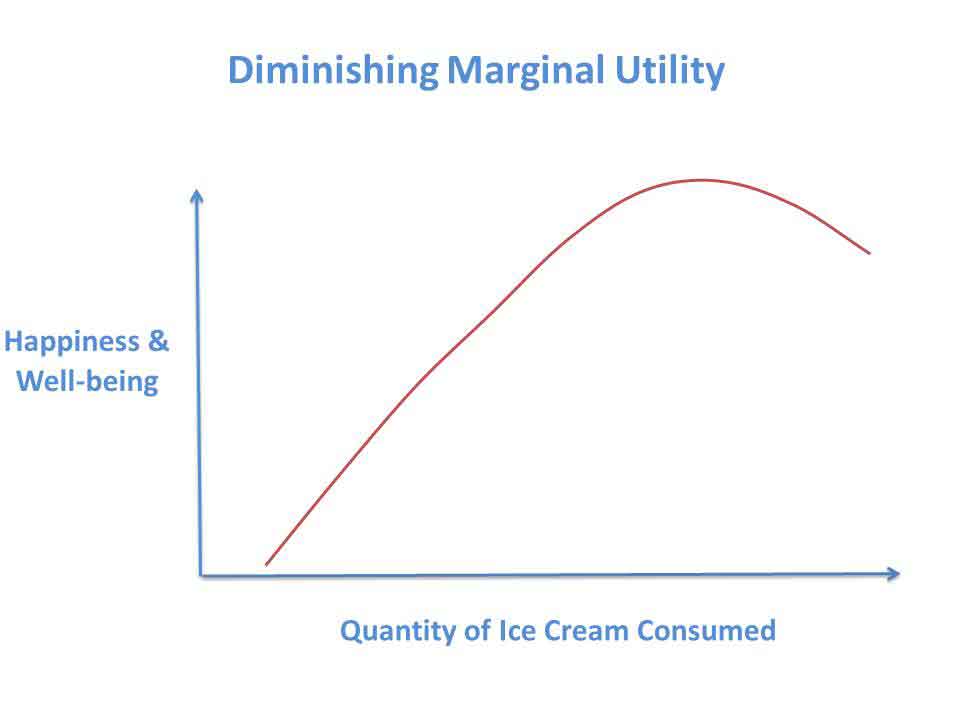

I took just a few days to learn about my client’s business, its current data ecosystem, and the functional requirements of the project. Just a few days. That’s key. Any more than that and I find we bump up against The Law of Diminishing Marginal Utility.

Conversations begin to devolve into “why this project can’t succeed” or worse, conversations around HOW we’ve done the project in the past and WHY it failed. Said differently, this is classic analysis paralysis. There’s NO WAY I’m going to learn everything I need to know in a couple of days. But invariably the customer comes away from these Discovery Workshops with their own homework assignments. They realize they have some known unknowns.

Dave’s 3rd Law of Product Management: Others may have Know How, I like to focus on the Know When, Know Why and Know What.

Quick Summary of the Problem

Everyone is doing consumer loans, payday loans, and extending consumer credit. Doing that requires credit risk management, which in turn requires researching the creditworthiness and background of the applicant. The default risk is borne by the creditor. This is a time-consuming process that many other FinServs are solving.

Instead, what if we mitigated some of that risk by tying our credit products to other consumer financial products such that other parties carried a larger share of the credit risk burden? At the same time we make our product attractive to the consumer. And sticky.

That sounds vague, I know, but I want to focus on how I help solve the problem without getting mired in the problem.

Step 2: Do we have the data we need?

This requires some deeper analysis. For this client it took a week. We did not have the data we needed. What data do we need for something like this:

- something I call a Risk Management Warehouse. A project like this is riskier than most. Will regulators approve of this? Will internal risk mitigation processes approve? We need a platform that can address these needs. These projects aren’t terribly difficult to do if we are agile. At the beginning we simply know we NEED this, but we don’t exactly know what comprises it. We will learn that as we go along.

- a way to store omni-channel data about our customers. I call this Customer 360 and I’ve written about this before. My client already had this, but had some gaps. We quickly wrote up what we needed and gave it to that team and they ran with that project.

- Customer Sentiment Analysis: We need a way to capture what our customers are saying about our product. We also use this as a Demand Signal Repository (I’ll write about what this is in the future), which is just a way to determine what the marketplace NEED is and how we can adjust our offerings to fit that need. My client had the trappings of a good solution for this already; we again merely needed to augment their existing IP.

- Customer Segmentation Analytics: FinServ, traditionally, has focused on a product-based approach to their offerings. But this is a one-size-fits-none approach. Instead, we want a customer-centric approach to products. We want our good customers to keep coming back. We want our bad customers to become good customers. My client had this already for other projects. We can leverage this to make smarter decisions.

Let me be honest. I’m not an expert in DSRs, customer segmentation, or risk management. But I don’t have to be. That’s my customer’s business. I just need to be an attentive, thoughtful listener. I’m listening for gaps that I can solve with my knowledge of data.

Step 3: Ingest Missing Data…Quickly

After we identify data gaps we need to identify where we can obtain that data. This is ETL or, frankly, if it’s done right, ELT or Kappa Architecture. Don’t worry too much about HOW we do this, just understand that ingesting data should be an HOURS to DAYS process, not the WEEKS to MONTHS effort that a new data source has traditionally required. That’s unacceptable.

To ingest what we needed took about a month. This was longer than I would’ve liked, but we learned valuable lessons about skillsets and capabilities. We had to make some adjustments to our expectations going forward. Data projects never go as planned unfortunately.
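To make the ELT idea concrete, here is a minimal sketch, not the pipeline we built for this client. It lands records untouched in a raw table and only shapes them when someone reads them, which is what keeps the load step fast. The table name `raw_landing`, the `payments` source, and the field names `cust` and `amt` are all hypothetical, and SQLite stands in for whatever storage a client actually uses.

```python
import json
import sqlite3


def load_raw(conn, source_name, records):
    """Land records as-is: one JSON blob per row, no upfront modeling."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_landing (source TEXT, payload TEXT)"
    )
    conn.executemany(
        "INSERT INTO raw_landing (source, payload) VALUES (?, ?)",
        [(source_name, json.dumps(record)) for record in records],
    )
    conn.commit()


def read_payments(conn):
    """Transform at read time: shape the raw payloads only when asked."""
    rows = conn.execute(
        "SELECT payload FROM raw_landing WHERE source = ? ORDER BY rowid",
        ("payments",),
    ).fetchall()
    shaped = []
    for (payload,) in rows:
        record = json.loads(payload)
        # Hypothetical field names; a real source dictates its own.
        shaped.append(
            {"customer_id": record["cust"], "amount": float(record["amt"])}
        )
    return shaped


conn = sqlite3.connect(":memory:")  # in-memory database, for illustration only
load_raw(conn, "payments", [{"cust": "c1", "amt": "25.50"}, {"cust": "c2", "amt": "10"}])
payments = read_payments(conn)
```

Because nothing is modeled at load time, adding a new source is just another call to `load_raw`; the modeling cost is deferred until an analyst actually needs the data.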

Step 4: Self-Service Analytics

Once the data was ingested we needed to find the nuggets of gold. Primarily this particular data project focused on:

- determining the algorithm to make credit offer extension decisions

  - my client has excellent data scientists on staff. They know FinServ. They know their data. They just needed help with feature engineering and data wrangling. I’ve written case studies on exactly HOW I scale a data science team.

- using those data sources noted above to determine what the correct offerings are.

- helping analysts combine and join the data in ways they couldn’t in the past.

How?

Self-service data is more practical with a data lake than a data warehouse. To be effective you need good search and query capabilities in your lake. This means that data and metadata need to be cataloged.

Notice I didn’t say you need Hadoop clusters or expensive cloud data lake technologies. What I propose are concepts that can be applied quickly using whatever technologies a customer wants to use. Yes, I prefer to use the public cloud because I can provision infrastructure in HOURS instead of WEEKS, but this isn’t always feasible, especially with FinServ.
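The cataloging idea can be sketched without any particular lake technology. The following is a minimal, in-memory illustration under my own assumptions: the `DatasetEntry` and `Catalog` names, the fields they carry, and the example paths are all hypothetical, and a real catalog would persist its entries somewhere shared.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """Metadata describing one dataset in the lake (hypothetical shape)."""
    name: str
    location: str
    description: str
    columns: list = field(default_factory=list)
    tags: list = field(default_factory=list)


class Catalog:
    """A toy metadata catalog: register datasets, then search by keyword."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def search(self, term):
        """Return names of datasets whose metadata mentions the term."""
        term = term.lower()
        hits = []
        for entry in self._entries.values():
            haystack = [entry.name, entry.description, *entry.columns, *entry.tags]
            if any(term in text.lower() for text in haystack):
                hits.append(entry.name)
        return sorted(hits)


catalog = Catalog()
catalog.register(DatasetEntry(
    name="customer_360",
    location="lake/curated/customer_360",  # illustrative path
    description="Omni-channel view of each customer",
    columns=["customer_id", "channel", "last_contact"],
    tags=["customer"],
))
catalog.register(DatasetEntry(
    name="sentiment_feed",
    location="lake/raw/sentiment",  # illustrative path
    description="What customers are saying about our product",
    columns=["customer_id", "text", "score"],
    tags=["sentiment"],
))
```

An analyst looking for joinable data could call `catalog.search("customer_id")` and find both datasets without asking anyone where they live.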

Self-service analytics is an on-going process. To merely get the data science and analytics team trained-up to do self-service analytics using data lake methods took about 2 weeks before they were confident that they could do the basics unassisted. Frankly, assistance will always be needed with complex SQL queries, data governance, data lineage, and pipeline operationalization. The goal is to get my client’s staff able to do what they NEED by themselves. What they NEED is access to data in a sandbox where they can explore.

Thinking in terms of Data Lakes and Big Data isn’t a technical shift. It’s cultural. I’ve seen clients with awesome data scientists and analysts, but they weren’t able to share their results with other teams to really disrupt their business. Their work is screaming for attention. Data lakes, Kappa Architecture, and data sandboxing are important, but the efforts only pay off when you close the loop and provide the data to the people who need the insights.

Step 4a: Operationalization

Pretty soon these projects begin to attract attention. It’s now that we think about the Ops concerns with our project. We need to have discussions around security and data governance and how we do DevOps/AIOps.

I call these “non-functional” requirements and we address these as the project progresses.

Aside on Data Governance

Many people disagree with me and many times I am wrong, but I like to consider doing a very light-touch data governance program. This goes contrary to industry conventional wisdom. Why do I advocate this? A data lake by definition is exploratory. We are trying to break the rules and find interesting data. If we ensure we don’t ingest things like SSNs and other PII into the sandbox area of the lake then we should be able to apply light governance. If we have a mechanism in place to re-hydrate the private data we should be ok.

Rarely will your analysts need SSNs to build a ML algorithm.
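One way to picture "keep PII out of the sandbox, but be able to re-hydrate it" is tokenization. This is a minimal sketch under my own assumptions, not a description of what this client deployed: the `TokenVault` class, the `SENSITIVE_FIELDS` set, and the record fields are hypothetical, and a real vault would live outside the sandbox behind proper access controls.

```python
import hashlib
import hmac

# Hypothetical list of fields that must never reach the sandbox in the clear.
SENSITIVE_FIELDS = {"ssn", "name"}


class TokenVault:
    """Swaps sensitive values for tokens and keeps the mapping privately."""

    def __init__(self, secret: bytes):
        self._secret = secret
        self._lookup = {}  # token -> original value; stays outside the sandbox

    def tokenize(self, value: str) -> str:
        # Keyed hash: the same input always yields the same token,
        # so analysts can still join and group on the tokenized column.
        token = hmac.new(self._secret, value.encode(), hashlib.sha256).hexdigest()[:16]
        self._lookup[token] = value
        return token

    def rehydrate(self, token: str) -> str:
        """Recover the original value; only privileged processes call this."""
        return self._lookup[token]


def to_sandbox(record, vault):
    """Return a copy of the record that is safe to land in the sandbox."""
    return {
        key: vault.tokenize(value) if key in SENSITIVE_FIELDS else value
        for key, value in record.items()
    }


vault = TokenVault(secret=b"demo-only-secret")  # never hard-code a real secret
safe_record = to_sandbox({"ssn": "123-45-6789", "balance": 100}, vault)
```

The analysts explore `safe_record` freely, and the small, tightly governed piece is the vault rather than the whole lake.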

Step 5: Apply Decisions to Real-time Data

No v1 data science algorithm is perfect. What we need is to get something out the door with a constant feedback loop. In AI this is often called reinforcement learning. We weren’t quite so bold; we merely wanted to put our predictions out there and retrain them as we had more data.

To do this the algorithms need to run in real-time. My client’s analysts and data scientists are best-of-breed. All I needed to do was ingest the streamed data, run the algorithms, and report back the decision. This can be done using APIs and webhooks. The integration could be wherever the credit is needed:

- at the POS terminal

- as a payroll deduction

- etc

This was about a 3 month effort. Systems integration is the bulk of the time. Even with the advent of micro-services and the proliferation of APIs, this is still time-consuming.
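The ingest, decide, and retrain loop described in this step can be sketched as follows. Everything here is an assumption for illustration: the `DecisionService` name, the event fields, the 0.5 cutoff, and especially the scoring rule, which is a stand-in for the client’s real model. In practice `handle_event` would sit behind an API endpoint or webhook handler.

```python
from dataclasses import dataclass, field


@dataclass
class DecisionService:
    threshold: float = 0.5  # placeholder cutoff, not the client's
    log: dict = field(default_factory=dict)

    def score(self, event):
        # Stand-in for the data scientists' model:
        # the share of income left over after existing debt payments.
        income = event["monthly_income"]
        if income <= 0:
            return 0.0
        return max(0.0, (income - event["monthly_debt"]) / income)

    def handle_event(self, event):
        """Called once per streamed credit request; returns the decision."""
        value = self.score(event)
        decision = {
            "request_id": event["request_id"],
            "approved": value >= self.threshold,
        }
        # Keep what we decided so the outcome can be attached later.
        self.log[event["request_id"]] = {"event": event, "decision": decision, "repaid": None}
        return decision

    def record_outcome(self, request_id, repaid):
        """Feedback loop: attach what actually happened to the decision."""
        self.log[request_id]["repaid"] = repaid

    def training_rows(self):
        """Events with known outcomes, ready for the next retraining run."""
        return [
            (entry["event"], entry["repaid"])
            for entry in self.log.values()
            if entry["repaid"] is not None
        ]


service = DecisionService()
first = service.handle_event(
    {"request_id": "r1", "monthly_income": 4000, "monthly_debt": 1000}
)
service.record_outcome("r1", repaid=True)
```

The point of the design is the log: every decision is stored alongside its eventual outcome, so retraining is a matter of reading `training_rows()` rather than reconstructing history.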

Step 6: Iterate

The above processes took about 4 months. That’s a much faster time-to-market than any other consultancy could offer. In reality, after Month 2 my client was experimenting with data.

We certainly weren’t done and couldn’t declare success yet. But we had an MVP (Minimum Viable Product) that we could go to market with. That’s important. We could now plan MVP+1, MVP+2, etc. We had a yardstick that we could use to measure progress of future iterations.

Outcomes

Successful data projects are never finished.

For this project I got to build stuff, break stuff, and build more stuff. It’s not always smooth sailing and there were bumps in the road. I have an aphorism for this: Successful companies focus on INPUTS, failures focus on OUTPUTS. And that is the point. Data projects have always been risky; we merely want to inject best practices to mitigate as much of that risk as possible.

I, unfortunately, rarely get to see the end-state of my engagements. In most cases the customer picks up the processes and frameworks we use quickly and soon outgrows us. It’s great to see a client and their staff smiling and excited to be using our methods. At some point I have nothing else to offer a customer with nagging data problems other than staff aug. This client was on firm footing down a path to becoming a digital disruptor.


Thanks for reading. If you found this interesting please subscribe to my blog.


Dave Wentzel
