Gaining Information Edge with AltData

“Lift” is something every data scientist and business person strives for. Getting better data is one approach to adding value. Once you’ve added all of your internal, proprietary data to your analytics and you’ve transformed and massaged it all you can, you need to start looking for valuable datasets in non-traditional places. This is called altdata, or alternative data. I am a Microsoft Technology Center data evangelist. Every day I help forward-thinking companies leverage altdata to gain a profitable edge. Let me show you how I do it and give you some ideas for altdata that you can leverage in your business. We’ll look at multiple verticals and lines of business and … I’ll even let you in on what I think is the hottest alt-dataset secret that you’ll want to leverage.

Quick History

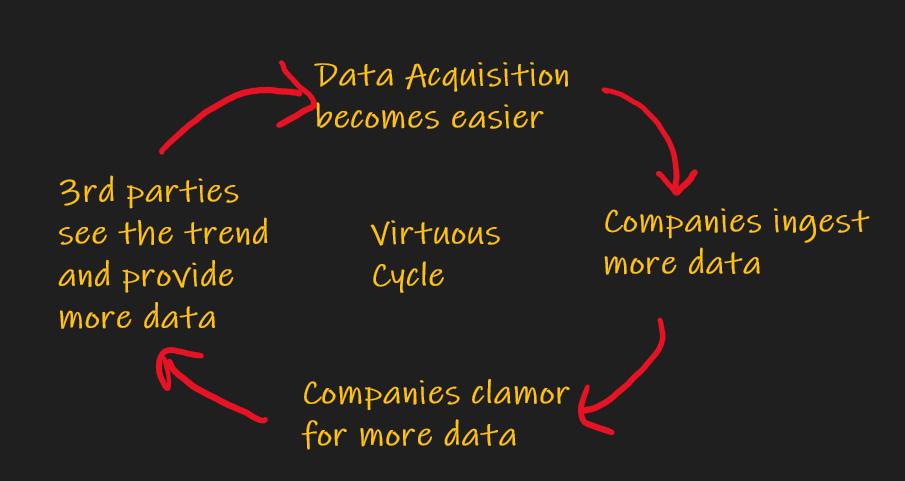

3rd party datasets have been around since the advent of data analytics. Within the last 10 years ingesting these datasets has become a lot easier, and that’s led to an explosion of business people wanting to leverage this data. That demand, in turn, has led more companies to sell their proprietary datasets (called data monetization). This virtuous cycle has recently attained critical mass.

Probably the first wave of this most recent boom was ingesting Twitter data. Twitter provided a free API that was easy enough that anyone could do sentiment and trending analysis to help with things like marketing campaigns and product development.

The next dataset everyone wanted to explore was weather data. “How can I use weather to provide lift to my value stream?” I did a lot of small projects with customers who experimented with weather to predict sales or deal with supply chain issues. In almost every case weather provided zero lift for the initial intended use case. But here’s the thing, with modern data ingestion processes and data lake paradigms we could experiment with weather data in a matter of weeks without a big capital software project investment. We could fail-fast, cheaply.

But these projects were not failures. For many of my customers this was their first foray into using altdata and they quickly found out how easy it was to do. For years business analysts (BAs) were clamoring for IT to ingest 3rd party datasets but the effort was always huge, and so was the backlog. With data lakes, ELT (vs ETL) paradigms, and EDA (exploratory data analytics), we could show that the turnaround for basic altdata ingestion to the lake was hours-to-days instead of weeks. Time-to-analytics was radically compressed. Suddenly BAs and data scientists were dreaming up all kinds of experiments with altdata. Even though the first use case for weather data generally failed, everyone was soon thinking up other use cases for weather. We already had the data flowing into the lake in real-time, so it wasn’t difficult to experiment on those subsequent use cases. This is anecdotal, but about 50% of subsequent use cases for weather data actually showed lift. This was encouraging.

This is the pattern I see: the first foray into altdata isn’t a success, but it lays the foundation for future successes.
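One way to make “lift” concrete in experiments like these is to compare forecast error with and without the altdata. The sketch below is only an illustration: the sales figures and both sets of forecasts are made-up numbers, and the function names are hypothetical. It measures lift as the relative reduction in mean absolute error, which is one reasonable definition among several.

```python
from statistics import mean


def mean_absolute_error(actual, predicted):
    """Average absolute gap between actual and predicted values."""
    return mean(abs(a - p) for a, p in zip(actual, predicted))


def lift(actual, baseline_pred, enriched_pred):
    """Relative error reduction of the enriched model over the baseline.

    Positive means the altdata helped; zero or negative means no lift.
    """
    base_err = mean_absolute_error(actual, baseline_pred)
    enriched_err = mean_absolute_error(actual, enriched_pred)
    return (base_err - enriched_err) / base_err


# Hypothetical daily unit sales and two sets of forecasts (made-up numbers).
actual_sales = [100, 120, 90, 110]
forecast_without_weather = [110, 110, 100, 100]
forecast_with_weather = [105, 115, 95, 105]

result = lift(actual_sales, forecast_without_weather, forecast_with_weather)
print(f"lift: {result:.0%}")
```

A result near zero is the “failed” first use case described above; the point of cheap ingestion is that you can rerun the same comparison for the next use case in minutes.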

And once you have a good pattern to follow to ingest raw altdata into your data lake, you’ll find that the marginal cost to ingest the next dataset is reduced. If any of your altdata experiments does provide lift to one use case, it will likely provide lift to other use cases in other departments, at a near-zero marginal cost. But there must be an organizational culture of data sharing, which I’ll talk about in Part 2 of this article.

How do you leverage altdata?

Leverage altdata by conducting experiments. You have to ingest the data quickly, preferably in real-time, and get it into the hands of the data scientists and analysts. You need a platform that can help you move quickly. The cloud, like Microsoft Azure, can help you do small experiments without needing a capital budget. The cloud is a utility…if your experiment doesn’t work, just turn it off. Here’s a quick list of other tools you should be using or researching:

- data lakes: these aren’t just an archive of CSV files. A proper data lake is structured to allow different users access to the data without the data going through a formal data warehouse modeling and ETL exercise (which takes MONTHS). A good data lake will allow data scientists to use their preferred tools to analyze the data (Python, Spark) while allowing business analysts to use their tools-of-choice (SQL or Power BI for instance).

- ELT tools with a connector ecosystem to many, many 3rd party datasets: Azure Data Factory is an ELT tool that allows an analyst with little formal data engineering training to ingest data into a data lake quickly. Never do ETL, always do ELT. Ingest the data with full fidelity to the data source. Don’t try to massage the data and clean it. Raw, dirty data has a lot of signal embedded in the noise.

- APIs: most altdata sources still require you to pull data from an API. REST APIs and OData are great for many things, but copying large amounts of data is not a strong suit. But we have to use what we are given. Ensure you have personnel who understand REST API patterns.

- Real-time, streaming data: think of any data you purchase or ingest as having an expiration date. You need to ingest the data as quickly as possible. All data, even if it’s ingested as a batch CSV process on the 1st of every month, should be treated and handled as though it is constantly being generated. Like IoT data. If you understand IoT data ingestion patterns and treat all data like IoT data, then all ingestion activities can follow the same pattern. This simplifies your architecture. KISS.

- data sharing concepts: This is where the altdata community is quickly headed. My next article will be devoted entirely to this concept.
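The ELT and API items in the list above can be sketched together: page through an API and land every response untouched in a date-partitioned raw zone. This is a minimal sketch under stated assumptions, not how Azure Data Factory works internally. The `fake_weather_api` function stands in for a real REST call, and the cursor-style pagination, folder layout, and all function names are hypothetical.

```python
import tempfile
from datetime import datetime, timezone
from pathlib import Path
from typing import Callable, Iterator, Optional, Tuple

# A page fetcher takes a cursor (None for the first page) and returns
# the raw response body plus the cursor for the next page, if any.
PageFetcher = Callable[[Optional[str]], Tuple[bytes, Optional[str]]]


def pull_pages(fetch_page: PageFetcher) -> Iterator[bytes]:
    """Yield raw response bodies until no next-page cursor is returned."""
    cursor = None
    while True:
        body, cursor = fetch_page(cursor)
        yield body
        if cursor is None:
            break


def land_raw(body: bytes, lake_root: Path, source: str,
             ingested_at: datetime, page: int) -> Path:
    """Write the untouched payload to a date-partitioned raw zone."""
    folder = lake_root / "raw" / source / ingested_at.strftime("%Y/%m/%d")
    folder.mkdir(parents=True, exist_ok=True)
    target = folder / f"{ingested_at.strftime('%H%M%S')}_page{page:04d}.json"
    target.write_bytes(body)  # no parsing, no cleaning: full fidelity
    return target


def fake_weather_api(cursor: Optional[str]) -> Tuple[bytes, Optional[str]]:
    """Hypothetical stand-in for a paginated REST endpoint."""
    pages = {
        None: (b'{"temp_c": 21.5, "station": "A"}', "p2"),
        "p2": (b'{"temp_c": null, "station": "B"}', None),
    }
    return pages[cursor]


lake = Path(tempfile.mkdtemp())  # a temp folder plays the role of the lake
now = datetime.now(timezone.utc)
landed = [land_raw(body, lake, "weather", now, i)
          for i, body in enumerate(pull_pages(fake_weather_api))]
print([str(p.relative_to(lake)) for p in landed])
```

Note that the second page contains a null temperature and is landed anyway. Deciding what to do with dirty values is the T in ELT, and it happens later, on top of the raw copy.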

As long as you understand the foundations of the basic concepts above you should be able to leverage altdata profitably.

Don’t get hung up on the technologies, it’s much more important to understand the patterns and processes.
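As an illustration of the “treat all data like IoT data” pattern from the list above, here is a minimal sketch in which a monthly CSV batch and a live feed flow through the same handler. The column names, sample rows, and function names are hypothetical, and the handler only counts events where a real pipeline would write them somewhere.

```python
import csv
import io
from typing import Dict, Iterable, Iterator


def csv_as_events(csv_text: str) -> Iterator[Dict[str, str]]:
    """Replay a batch file one row at a time, as if each row just arrived."""
    yield from csv.DictReader(io.StringIO(csv_text))


def handle_events(events: Iterable[Dict[str, str]]) -> int:
    """Single ingestion path that does not care whether the source is a batch or a stream."""
    count = 0
    for event in events:
        # A real pipeline would append the event to the lake or a queue here.
        count += 1
    return count


# Hypothetical sources: a monthly batch file and a live feed of one event.
monthly_batch = "store_id,units\n1,10\n2,7\n"
live_feed = iter([{"store_id": "3", "units": "4"}])

print(handle_events(csv_as_events(monthly_batch)))
print(handle_events(live_feed))
```

Because the handler only sees an iterable of events, adding a new source means writing one small adapter rather than a new pipeline.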

Isn’t everyone doing this now? How do I get information edge?

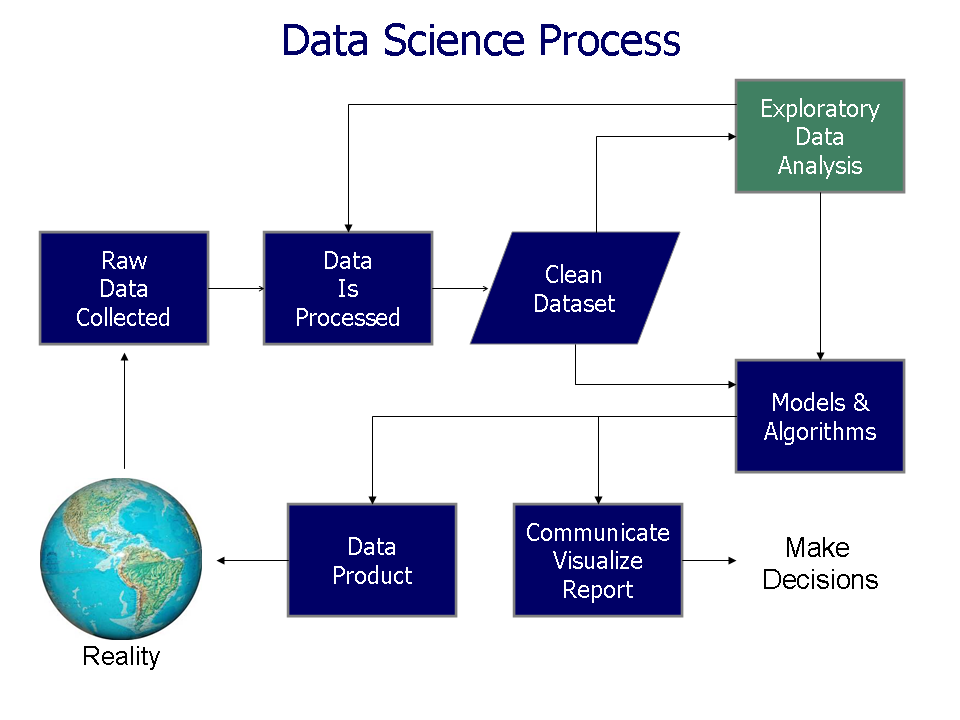

Certainly more and more companies are doing altdata experiments. But just ingesting data isn’t enough. Anyone can buy a dataset and do predictions, but if everyone is buying that same dataset, where’s the competitive advantage? You need to spend time working with the data. This is often called Exploratory Data Analysis (EDA). We are looking for patterns in the data and applying statistical methods and visualizations to understand the data better.

Turning the patterns you find into inputs for your models is what data scientists call feature engineering, and it is what gives you information edge. Data ingestion doesn’t magically provide lift. You need to find the valuable nuggets of gold.
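To illustrate why working with the data matters more than owning it, the sketch below compares a raw weather column with an engineered one. Everything here is an assumption for illustration: the temperatures and sales are made-up numbers chosen so that sales spike on unusual days (hot or cold), and “deviation from the trailing 3-day average” is just one feature an analyst might try.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def deviation_from_trailing_mean(values, window=3):
    """Engineered feature: how unusual is today versus the previous `window` days?"""
    return [abs(values[i] - sum(values[i - window:i]) / window)
            for i in range(window, len(values))]


# Hypothetical daily temperatures (C) and unit sales (made-up numbers).
temps = [20, 21, 19, 30, 20, 21, 10, 20]
sales = [100, 102, 99, 140, 101, 100, 138, 100]

window = 3
unusual = deviation_from_trailing_mean(temps, window)

# Compare on the same days (the first `window` days have no trailing mean).
raw_corr = pearson(temps[window:], sales[window:])
engineered_corr = pearson(unusual, sales[window:])
print(f"raw temperature vs sales:     {raw_corr:+.2f}")
print(f"unusual-weather flag vs sales: {engineered_corr:+.2f}")
```

With this toy data the raw temperature shows almost no relationship to sales while the engineered feature shows a strong one. A competitor holding the same dataset but looking only at the raw column would conclude that weather provides no lift.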

What does a good altdata-set look like?

- Focus on datasets that you can get exclusive access to. This means your competition isn’t experimenting with it too. Remember, the raw altdata rarely provides competitive advantage. It is your analysts who will find the nuggets of gold after experimenting with it.

- Can you quickly use the altdata to semantically enrich your existing data? If you can extract more value from the data than your competition, that’s a great altdata-set.

- How quickly can you leverage the data before it expires? If you are buying real-time datasets, you had better be able to leverage them quickly in the value stream.

- Can you get access to the altdata’s SMEs? Getting access to SMEs that have a deep knowledge of the data and domain is invaluable. Always ask your data vendor if you can get some of their SME’s valuable time and be prepared to ask them good questions.

I once consulted for a FinTech company that was blindly purchasing credit agency data on its customers. We received almost 2000 data points for each customer in a nightly feed. The dataset’s documentation was hundreds of pages. And I still couldn’t understand where to find value. So I got an hour of their SME’s time and I asked a very simple question: If you were me and were trying to provide lift to our company with this data, where would you start? What followed was a 2-hour conversation that we thankfully recorded and replayed MANY times.

Where should I inject altdata into my value stream?

Every business is different, but every business has what we call transactional data. Think of the transaction as what generates revenue. Assume you sell goods on an e-commerce website. Your transactional data is the record of the sales data when your customer submits an order. Generally the transaction in an e-commerce system starts when the user first lands on your homepage, navigates through some products, does a search, adds a product to the shopping cart, and checks out.

There is a LOT of data you likely haven’t captured about this transaction. Some examples:

- Who was the referrer that sent the customer to your website? A Bing search? A Google ad? If we knew this we could tailor our marketing efforts.

- Can we attribute the sale to one of our existing marketing campaigns? This will tell us where to focus future campaigns.

- What do we know about this customer? Their interests, social media profiles, etc. This will give us a more holistic view of our customer.

- Can we follow up and get the customer’s sentiment on their experience with us? This will tell us what we should be doing in the future.

This is all peripheral data to the transaction event. We can’t capture this data easily in our e-commerce system, but we can find all of this information in altdata from other sources. This peripheral data likely adds the most lift.

Remember, it’s not the data itself that is valuable. It’s the patterns and insights gleaned from the data that add the value.
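Enriching transactions with peripheral data is, mechanically, a join. The sketch below is a minimal illustration with made-up orders and a hypothetical referral feed keyed by session ID; the field names and the `enrich` and `revenue_by` helpers are assumptions, not part of any particular e-commerce system.

```python
# Hypothetical transactional data captured by the e-commerce system.
transactions = [
    {"order_id": 1, "session_id": "s1", "total": 40.0},
    {"order_id": 2, "session_id": "s2", "total": 15.0},
    {"order_id": 3, "session_id": "s3", "total": 60.0},
]

# Hypothetical peripheral altdata from another source, keyed by session.
referrals = {
    "s1": {"referrer": "search", "campaign": "spring_sale"},
    "s3": {"referrer": "ad", "campaign": "spring_sale"},
}


def enrich(rows, peripheral, key):
    """Left join: keep every transaction, attach peripheral fields when known."""
    return [{**row, **peripheral.get(row[key], {})} for row in rows]


def revenue_by(rows, field):
    """Total revenue grouped by an enriched field; missing values become 'unknown'."""
    totals = {}
    for row in rows:
        label = row.get(field, "unknown")
        totals[label] = totals.get(label, 0.0) + row["total"]
    return totals


enriched = enrich(transactions, referrals, "session_id")
print(revenue_by(enriched, "campaign"))
print(revenue_by(enriched, "referrer"))
```

The left join matters: a transaction with no matching altdata is kept and reported as unknown, so the size of that bucket tells you how much of your revenue the new dataset actually explains.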

Become Data-Driven at the MTC

Are you convinced that your company is ready to leverage altdata?

Most companies are taking steps to become more data-driven but still aren’t leveraging external datasets. Anecdotally, when I ask why, the overwhelming responses are:

- “we don’t have the resources that can ingest the datasets our analysts want”

- “we don’t have analysts and processes that can leverage this data. There is no playbook on how to gain actionable insights from these data sources.”

I am a Microsoft Technology Center (MTC) Architect focused on data solutions. The MTCs are a service Microsoft offers to its customers and we strive to be their Trusted Advisors. We offer services where we can show you how to leverage altdata quickly with a fail-fast mentality. With data lakes, ELT paradigms, and Exploratory Data Analytics we can show your team the simple patterns that have been proven to work.

Come to us with a problem you think might be a good use case for altdata. Within a few days we can build a rapid prototype and show you the Art of the Possible. We’ll show you what it takes to start a successful data initiative and we’ll help you solve problems in days that would’ve taken months just a few years ago.

Does that sound compelling? Contact me on LinkedIn and we’ll get you started on your altdata journey.

In Part 2 of this series we’ll look at how Data Sharing is revolutionizing altdata initiatives at many companies. In Part 3 we’ll look at some concrete use cases for altdata in different verticals.

Are you convinced your data or cloud project will be a success?

Most companies aren’t. I have lots of experience with these projects. I speak at conferences, host hackathon events, and am a prolific open source contributor. I love helping companies with Data problems. If that sounds like someone you can trust, contact me.

Thanks for reading. If you found this interesting please subscribe to my blog.

Dave Wentzel CONTENT
