Paradox of Unit Testing?

For the last half decade, every one of my employers has tried to automate more and more of the QA work. True, for the small portion (less than 5%) covered by the automated tests, the code is entirely bug-free. However, a machine cannot test for bugs it doesn't know to look for. Here's a well-known story that you can google if you don't believe me. When M$ was developing the Vista release of Windows, there was an internal management push to use automated testing tools. The reports indicated that all of the tests passed. Yet the public reception of Vista was less than stellar. Users felt the interface was inconsistent, unpolished, and full of bugs. An interface becomes aesthetically pleasing when it has consistent patterns of behavior and look-and-feel. How do you automate testing of that? You don't. QA testers will only file bugs for those issues after they use features repeatedly and notice the inconsistencies. These problems, obvious to a human, do not fit an automated testing tool's definition of a bug. Taken in toto, these "bugs" led users to feel that Vista was inferior to XP.

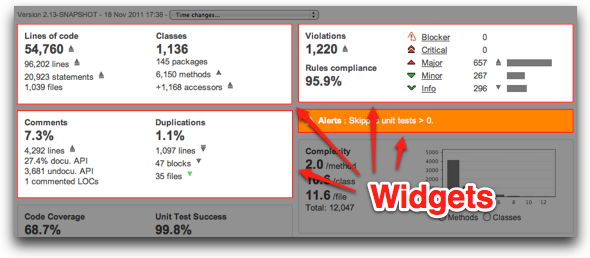

This is a family blog, but occasional blasphemy is necessary when something is too egregious. Sonar is code quality management software. Sonar can tell you, for instance, which Java classes have unit tests and how many code branches have ZERO tests. It can then drill down into your code and estimate where bugs are likely to be, given the technical debt. This is less than perfect, but it gives you a good feel for where your bugs may be, and I'm all for that. It's another tool in the toolbelt.
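
For what it's worth, the percentage Sonar reports isn't necessarily just "lines tested divided by lines written." SonarQube's metric documentation describes its overall "Coverage" as a blend of line and condition (branch) coverage: (CT + CF + LC) / (2B + EL), where CT and CF count conditions evaluated to true and to false at least once, LC is the number of covered lines, B is the total number of conditions, and EL is the number of executable lines. Here's a minimal Java sketch of that arithmetic. The class and method names and the sample counts are hypothetical (this is not a Sonar API), and your Sonar version may compute things differently.

```java
// A sketch of the combined "Coverage" formula from SonarQube's metric definitions:
//   coverage = (CT + CF + LC) / (2 * B + EL)
// Class and method names are hypothetical; this is not a Sonar API.
final class CoverageMetric {

    private CoverageMetric() {}

    /**
     * @param conditionsTrue  CT: conditions evaluated to true at least once
     * @param conditionsFalse CF: conditions evaluated to false at least once
     * @param coveredLines    LC: executable lines run by at least one test
     * @param totalConditions B: total number of conditions
     * @param executableLines EL: total number of executable lines
     * @return coverage as a percentage from 0 to 100
     */
    static double coverage(int conditionsTrue, int conditionsFalse, int coveredLines,
                           int totalConditions, int executableLines) {
        int denominator = 2 * totalConditions + executableLines;
        if (denominator == 0) {
            return 0.0; // this sketch's choice: nothing to cover reports 0% instead of dividing by zero
        }
        return 100.0 * (conditionsTrue + conditionsFalse + coveredLines) / denominator;
    }

    public static void main(String[] args) {
        // Hypothetical counts: 7 of 100 executable lines covered, none of 10 conditions exercised.
        double pct = coverage(0, 0, 7, 10, 100);
        System.out.println(Math.round(pct * 10) / 10.0 + "%"); // rounded to one decimal place
    }
}
```

With 7 of 100 executable lines covered and none of 10 conditions exercised, `main` prints `5.8%`. Each condition adds 2 to the denominator, and an unexercised one adds nothing to the numerator, so branchy code with few tests scores even lower than its line coverage alone.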

The problem is that the tool gets a bit cute with its management reporting capabilities. For instance, let's say your "Code Coverage" is a measly 7% (i.e., 7% of your code has identifiable unit tests). Is that bad? If I were management, I'd be pissed. The fact is, you don't need to unit test EVERY line of code. Do you need to ASSERT that an "if" statement can evaluate a binary proposition and correctly switch code paths? I think not. If we needed a formal JUnit test for every line of code, our projects would be even further behind.
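
To make that concrete, here's a hypothetical example of the kind of test that exists mainly to satisfy a branch-coverage report. The `feeCents` method, the $50 threshold, and the fee are invented for illustration. In a real project the checks would be JUnit test methods using `assertEquals`; a tiny stand-in keeps this sketch runnable without any dependencies.

```java
final class ShippingExample {

    private ShippingExample() {}

    // Hypothetical production code: one "if", two paths.
    // Orders of $50.00 (5,000 cents) or more ship free; everything else pays a flat $7.99.
    static int feeCents(int orderTotalCents) {
        if (orderTotalCents >= 5_000) {
            return 0;
        }
        return 799;
    }

    // Minimal stand-in for JUnit's assertEquals so the sketch has no dependencies.
    static void assertEquals(int expected, int actual, String label) {
        if (expected != actual) {
            throw new AssertionError(label + ": expected " + expected + " but was " + actual);
        }
    }

    // The "coverage" tests: each one only confirms that Java's if took the expected path.
    public static void main(String[] args) {
        assertEquals(0, feeCents(5_000), "true branch");    // exercises the if-body
        assertEquals(799, feeCents(4_999), "false branch"); // exercises the fall-through
        System.out.println("both branches covered");
    }
}
```

Both checks pass and branch coverage for `feeCents` reaches 100%, yet neither tells you whether $50 was the right threshold in the first place.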

The solution to software quality problems is simple. There is management, and there are coders. Coders want to solve software quality by adding more software: automation tools, TDD, more unit tests. These tools are meant to prove to management that the software is bug-free. But how do you prove definitively that software is totally bug-free? You can't. The logic we coders use is flawed.

Management doesn't care about any of this. They want:

- more feature functionality

- fewer bugs

- faster time to market

- cheaper

Management couldn't care less (or at least shouldn't care) about TDD, dynamic logic code, or more formal testing. (But they do like the pretty Sonar graphs they can use to show that their developers are lunkheads.) What management does understand is good economics and the Law of Diminishing Returns. If we focus on tracking down and fixing every last bug, we may get better quality, but we will lose in the marketplace.

Good management knows that the goal is not bug-free software; the goal is software with just few enough bugs that a sucker, er, customer will spend money on it. Many coders don't realize this or have forgotten it. Stop worrying about endless testing.

What is the best way for a coder to test her code?

Assuming you accept my contention that we test too much, what is the ONE thing we absolutely should do as developers to improve quality?

Code reviews

There is no substitute. I have spent 10 minutes looking at another person's code and pulled out handfuls of bugs, in code that had full unit tests. Likewise, I've had "junior"-level people review my work, and within 10 minutes they've spotted bugs I thought my tests covered.

Always code review.

Dave Wentzel